A Tale of Two Courses

It Was the Best of Instruction; It Was the Worst of Instruction

Recently, I took two partial-week, instructor-led, synchronous courses through my workplace. While both courses were similar in time commitment and skill level, the two were sharply different in content, delivery, applicable lessons, classroom management, and authenticity. My co-worker provided me with a course evaluation form to consider as I took each course, to fill out at the end of the course, and then to discuss with our team. As a result of my evaluations and our group discussions, several courses are being audited to ensure their content aligns with our organizational goals and objectives.

While sometimes dreaded by instructors, course evaluations are important for a number of reasons: materials become outdated, new organizational objectives are created, historical organizational objectives are updated, and student/organization needs change. Ideally, evaluations would occur at least yearly for each course and instructor, or as needed when major changes occur in an organization.

As an instructional design intern, I was in the unique position to take advantage of open class seats for the two classes I evaluated. There were several benefits to taking the courses: the training was provided through our agency so I was able to take them at no cost to my employer, I improved my work education transcript, I was able to practice my learning as an instructional designer, I was interested in both course subjects, and I was able to provide important feedback about the courses to my team. Conducting course evaluations is an important skill for all instructional designers to develop. For privacy reasons, the dates, names, and course titles will not be shared.

Evaluation Methods

When evaluating courses, it’s important to evaluate the content, rather than the personality of the trainer. There are many ways to evaluate courses, and it’s a necessary process for learning providers to administer on a regular basis. The most commonly used evaluation is Kirkpatrick’s model. The four levels below are from http://www.kirkpatrickpartners.com/Our-Philosophy/The-Kirkpatrick-Model.

- Level 1 Reaction: evaluates how participants respond to the training. The degree to which participants find the training favorable, engaging and relevant to their jobs.

- Level 2 Learning: measures if they actually learned the material. The degree to which participants acquire the intended knowledge, skills, attitude, confidence and commitment based on their participation in the training.

- Level 3 Behavior: considers if they are using what they learned on the job. The degree to which participants apply what they learned during training when they are back on the job.

- Level 4 Results: evaluates if the training positively impacted the organization. The degree to which targeted outcomes occur as a result of the training and the support and accountability package.

Organizations apply Level 1 Reaction and Level 2 Learning roughly 80% of the time. Level 3 Behavior and Level 4 Results are used 20% of the time or less. Level 1 Reaction evaluations are simple student surveys at the end of each course. We ask all of our students to complete such surveys after each class. These surveys are sometimes referred to as reaction sheets, student surveys, or smile sheets. Level 2 Learning evaluations often take the form of a pre- and post-test, as a way to measure student learning. Another example would be to have students work in small groups, applying what they’ve learned in the course. Level 3 Behavior evaluations should occur 3-6 months after a course has ended. In these evaluations, students are asked if they are using what they’ve learned. Level 4 Results evaluations are used to align the learning to the business/organizational goals. For instance, if an organization wanted a 25% improvement from its sales force, its salespeople would participate in a course, and then the organization would track the outcome. This is also known as a return on investment (ROI). The course evaluation sheet that I used was a basic Level 1 Reaction, but applied as a course evaluation from an instructional designer, rather than as a student evaluation.
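For readers who want to see the arithmetic behind a Level 2 pre- and post-test comparison and a Level 4 target check, here is a minimal sketch in Python. The function names and any scores you pass in are hypothetical; they are not part of Kirkpatrick’s model or of the evaluation form I used.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Level 2 sketch: average point gain from pre-test to post-test.

    Assumes both lists hold scores for the same students in the same order.
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("Each student needs both a pre-test and a post-test score")
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


def percent_change(baseline, outcome):
    """Percent change of an organizational measure relative to its baseline."""
    return (outcome - baseline) / baseline * 100


def met_target(baseline, outcome, target_percent):
    """Level 4 sketch: did the tracked outcome reach the targeted improvement?"""
    return percent_change(baseline, outcome) >= target_percent
```

As a usage example with made-up numbers, `average_gain([60, 70], [80, 90])` gives an average gain of 20 points, and `met_target(200, 250, 25)` checks whether moving a measure from 200 to 250 meets a 25% improvement goal.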

Accuracy of Surveys In General

The best way to get honest feedback about a course, from the student perspective, is to let students know before the training that they will be given a survey, so that they will keep it in mind during the instruction. At the end of the course, the survey should be administered by a third party (i.e., without the instructor/facilitator in the room), and should be anonymous. It is important that the students do not feel pressure from the instructor when sharing their opinions about the course, and that there are no repercussions for sharing those opinions.

It can be difficult to get students to participate in surveys. Normally, internal surveys will get only about a 30% response rate. Many online calculators can tell you how many people you need to survey in order to get accurate response data. Here is one by SurveyMonkey: https://help.surveymonkey.com/articles/en_US/kb/How-many-respondents-do-I-need

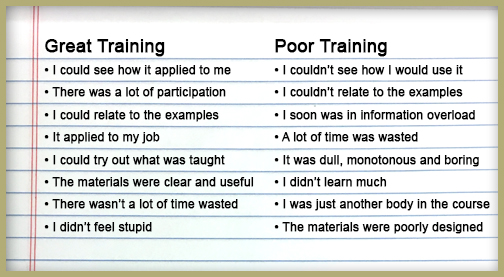
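If you would rather see the math than use an online calculator, the sketch below uses a common textbook formula for sample size (Cochran’s formula with a finite population correction). This is an assumption on my part; it is not necessarily the exact method any particular online calculator uses. The defaults (95% confidence, 5% margin of error, and the 30% response rate mentioned above) and the function names are illustrative.

```python
import math


def required_sample_size(population, margin_of_error=0.05, z_score=1.96, proportion=0.5):
    """Estimate how many completed surveys are needed.

    z_score=1.96 corresponds to roughly 95% confidence; proportion=0.5 is the
    most conservative assumption about how answers will split.
    """
    # Sample size for a very large (effectively infinite) population
    n0 = (z_score ** 2) * proportion * (1 - proportion) / (margin_of_error ** 2)
    # Finite population correction for smaller groups, such as one organization
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


def invitations_needed(sample_size, response_rate=0.30):
    """Estimate how many people to invite, given an expected response rate."""
    return math.ceil(sample_size / response_rate)


if __name__ == "__main__":
    needed = required_sample_size(population=500)
    print("Completed surveys needed:", needed)
    print("Invitations to send:", invitations_needed(needed))
```

For a group of 500 people, this estimate calls for 218 completed surveys. At a 30% response rate that would mean inviting more people than the group contains, which shows why raising the response rate matters more for small groups than sending more invitations.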

The Difference Between Great Training and Poor Training

I recently read the book Telling Ain’t Training. The general theme of the book is “Learner-centered, Performance-based.” While much of my copy of the book is highlighted, one part of the book especially rang true when I was evaluating the two courses. Here are some of the differences between great and poor training, as listed in Telling Ain’t Training:

Example of a good training experience

Rating Scale: 1 = Not at all, 5 = Extremely well

Areas for possible improvement:

- Add job aids and other takeaways besides the book.

- Rewrite the course objectives, and discuss them in the beginning of the course.

- Opportunities for correction:

  - There was a large group of learners from the same office who were unhappy with a co-worker, and didn’t grasp that the course was about them, not someone else. They collectively “made a case” against the co-worker, rather than applying the learning to their own actions/behaviors.

  - One student was allowed to use both a laptop and a cell phone with the ringer on. The student should have been asked to at least silence her devices so as not to distract the class.

Areas of success:

- Class was thoroughly enjoyable, and teachings were applicable to the job

- Instructor was relatable, approachable, positive and helpful

- Course materials were organized, and well conceived

- Instructor made good use of class time, and provided breaks and activities to keep the learners’ attention

Example of a poor training experience

Rating Scale: 1 = Not at all, 5 = Extremely well

Areas for possible improvement:

- Too much emphasis on concepts that don’t align with goals. Revisit organizational goals and course description objectives to ensure that materials and lecture align with those goals.

- Update all of the printed materials so that they align with the lecture

- Completely remove personal stories that discredit the instructor. Revise remaining stories to be shorter, and to supplement lesson teachings.

- Provide more bio breaks, and respect learners’ time by ending class on time.

Areas of success:

- Instructor provided candy and coffee

- Instructor was easy to hear, and was available during breaks and after class for questions

- Instructor seemed like a genuine and nice person, was approachable, and smiled easily

Acknowledgements:

Telling Ain’t Training, by Harold D. Stolovitch and Erica J. Keeps. Copyright 2002 by the American Society for Training & Development, Harold D. Stolovitch, and Erica J. Keeps.